Is artificial intelligence better suited than humans to keeping our networks safe?

October 4, 2018

Keeping our networks secure from hackers is becoming too big a job for humans. The increasing complexity of networks, much of it arriving hand in hand with the expansion of the IoT, together with a dearth of available talent, points to one conclusion: attacks and security breaches will only get more severe as more devices and data are brought online.

Just this month, Facebook fell victim to a network attack that exposed the personal information of 50 million of its users. The same week as the Facebook breach, ridesharing company Uber was fined $148 million for failing to disclose a 2016 breach that exposed personal data, including driver's license information, for roughly 600,000 of Uber's drivers as well as information on 57 million Uber mobile app users. Uber tried to cover up the breach and paid the hackers a $100,000 ransom in 2017 for the stolen data to be destroyed.

Regarding the Uber hack, Ankur Laroia, a security data management expert and strategy solutions leader at Alfresco (an enterprise software company), told Design News that breaches of this level are going to become the new normal and will also be very costly. A recent study by the Ponemon Institute, commissioned by IBM, estimated the average cost of a data breach to be $3.86 million in 2018—a 6.4 percent increase from 2017.

“Securing applications as well as computing infrastructure is a shared responsibility between those that make, deploy, and maintain these systems,” Laroia said. “Over time, our ability to remain vigilant has waned, and our attention to keeping our house tidy and in order has also dwindled significantly. We must do more than secure the firewall; we have to properly identify, tag, and curate information and ultimately dispose of it when it isn’t of value or per statutes.”

An AI Arms Race

“Why is it so relatively easy to hack both low- and high-end enterprises?” asked Adi Ashkenazy, VP of product at XM Cyber, an Israeli cybersecurity company. “It's because it's really, really hard to understand your existing security culture. What are the existing potential attack paths toward your critical assets?”

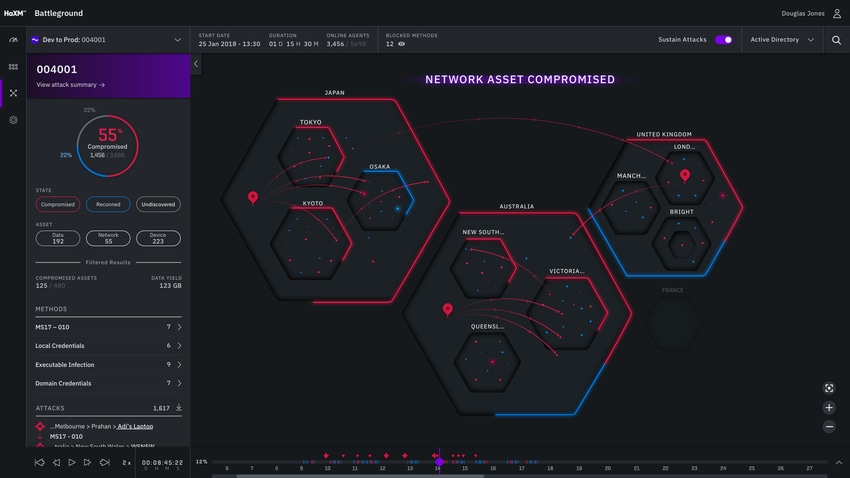

XM Cyber is among a number of companies leveraging a new tool in the battle over cybersecurity: artificial intelligence. HaXM, a simulation platform developed by XM Cyber, uses AI bots to continuously simulate network attacks and also provide actions and remediations against security holes and exploits.

Speaking with Design News, Ashkenazy said the sophistication of today's systems is making cybersecurity too arduous a task for humans to manage alone. “If you look at a modern enterprise, you're talking hundreds or thousands of endpoints in the cloud and otherwise,” he said. “As a defender, you're expected to keep track of all of this: checking for needed patches, vulnerabilities, and even human mistakes. And you realize this is not a task for a human being.”

Ashkenazy is not alone in this assessment. UK startup Darktrace uses machine learning to monitor devices and users on networks, learning what normal activity looks like, flagging suspicious activity, and acting accordingly. In a recent interview with Bloomberg, Nicole Eagan, CEO of Darktrace, talked about the growing need for sophisticated AI tools to counter hackers' own use of the technology, as organized cybercrime rings begin to use AI algorithms in cyberattacks. “This is fast becoming a war of algorithms: it's going to be machine learning against machine learning; AI against AI,” Eagan told Bloomberg.

Companies like San Jose-based SafeRide are leveraging AI specifically for securing connected and autonomous vehicles. The company's vSentry product suite for private and fleet vehicles uses AI to monitor both in-vehicle systems and connected vehicle networks for malicious activity. In June of this year, SafeRide announced a partnership with Singapore-based ST Engineering to integrate vSentry into ST Engineering's hardware platforms for electric and autonomous vehicles.

XM Cyber's Ashkenazy believes it's important for companies to adopt AI tools now, before they become even more widely available to hackers. “The way I look at this is hackers, at the end of the day, have limited resources and are economically constrained. So they're trying to be cost effective with what they do. Hackers do not have a marketing department... They will use anything and everything as long as it makes economic sense,” he said. “The use of AI will start making sense when we have a large number of AI-based security controls that will need to be defeated. If you're a hacker today, do you really have to defeat that many controls based on AI? Not really.”

The Human Condition

Ashkenazy continued, “Unfortunately, sometimes you can trust a machine. Human mistakes result in networks being compromised. And this will be an area where machines will replace humans quite fast.”

A typical cybersecurity simulation will involve humans working in two teams: a “red team” tasked with attacking a network, and a “blue team” charged with stopping the red team and plugging any security holes. “If you're a manufacturing company working with 300 suppliers, you may be concerned about a supply chain attack, for example,” Ashkenazy said. “What happens if someone starts at the server, where suppliers are connecting? Can they reach critical assets, such as product designs and diagrams, from that point?”
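The supply chain question Ashkenazy poses is, at bottom, a reachability question on a graph of hosts and connections. The sketch below is only an illustration of that idea, not a description of how HaXM works: the host names, the `NETWORK` map, and the `find_attack_path` helper are all hypothetical, and a breadth-first search stands in for whatever techniques a real simulation platform would use.

```python
from collections import deque

# Hypothetical network map: each host lists the hosts it can reach directly.
# All names and connections are invented for illustration.
NETWORK = {
    "supplier_portal": ["web_server"],
    "web_server": ["app_server", "file_share"],
    "app_server": ["design_db"],
    "file_share": [],
    "design_db": [],
    "hr_system": ["file_share"],
}


def find_attack_path(network, start, target):
    """Return the shortest hop-by-hop path from start to target, or None."""
    queue = deque([[start]])   # each queue entry is a path explored so far
    visited = {start}          # hosts already queued, to avoid revisiting
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in network.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


# Can someone who starts at the supplier-facing server reach the product designs?
print(find_attack_path(NETWORK, "supplier_portal", "design_db"))
# A host with no outbound connections cannot reach the designs at all.
print(find_attack_path(NETWORK, "file_share", "design_db"))
```

In this toy map the first call finds a path through the web and application servers, while the second returns `None`. A defender reading that result would know which hop to cut, which is the kind of short, prioritized list the article describes.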

Checking all of this can be grueling work. And when both teams come from the same security company, it can even create unintentional human biases. One team may not want to make the other look too bad and thus miss out on fixing a critical issue.

XM Cyber's solution is to let AI act as a “purple team”: a combination of both red and blue. “What we decided to do was build an automated hacking machine,” Ashkenazy said. “The goal for this machine is to be scalable enough to work in large environments, continuous and very safe for production environments... As it attacks, it's collecting information. This results in a very tight list of issues that the blue team will be using to evolve its network.”

Every time a security control is added to a system, it also adds inherent risk, since that control itself may have unknown vulnerabilities. This is where many see the most opportunity for AI. Just as AI is touted for taking human workers out of repetitive, tedious factory work, it is credited with doing the same for cybersecurity experts, allowing them to focus on higher-level functions and needs.

Of course, as with any AI system, there's always the matter of training. AI hasn't evolved to the point where it can be creative enough to devise cyberattacks on its own. And for XM Cyber, this is where the human element should still come into play. Let cybersecurity experts discover clever attacks and let the AI go about the work of trying to implement them. Ashkenazy said the core of XM Cyber's HaXM system is still an expert system, with deep learning used for niche activities like password guessing or trying to understand the value of a target. “We feed it manually through our research team with new categories and types of attacks,” he explained.

The day may never come when humans can be removed entirely from the cybersecurity equation. But as AI technologies become more widely accessible and affordable for benevolent and malicious parties alike, humans aren't going to be able to win the battle alone.

“We're not solving security. There's no drop-the-mic moment where we're done,” Ashkenazy said. “I don't tell people to fire their entire red team. They use [AI] to help them concentrate on what they do best. Humans find new attacking methods and they feed it back into the machine. They keep finding new methods, and the machine scales it to the entire organization and checks it continuously so the humans don't have to.”

Chris Wiltz is a former Senior Editor at Design News covering emerging technologies including AI, VR/AR, and robotics.
